Weekly Newsletter - October 11, 2024

AI Breakthroughs Take Center Stage in Nobel Prizes

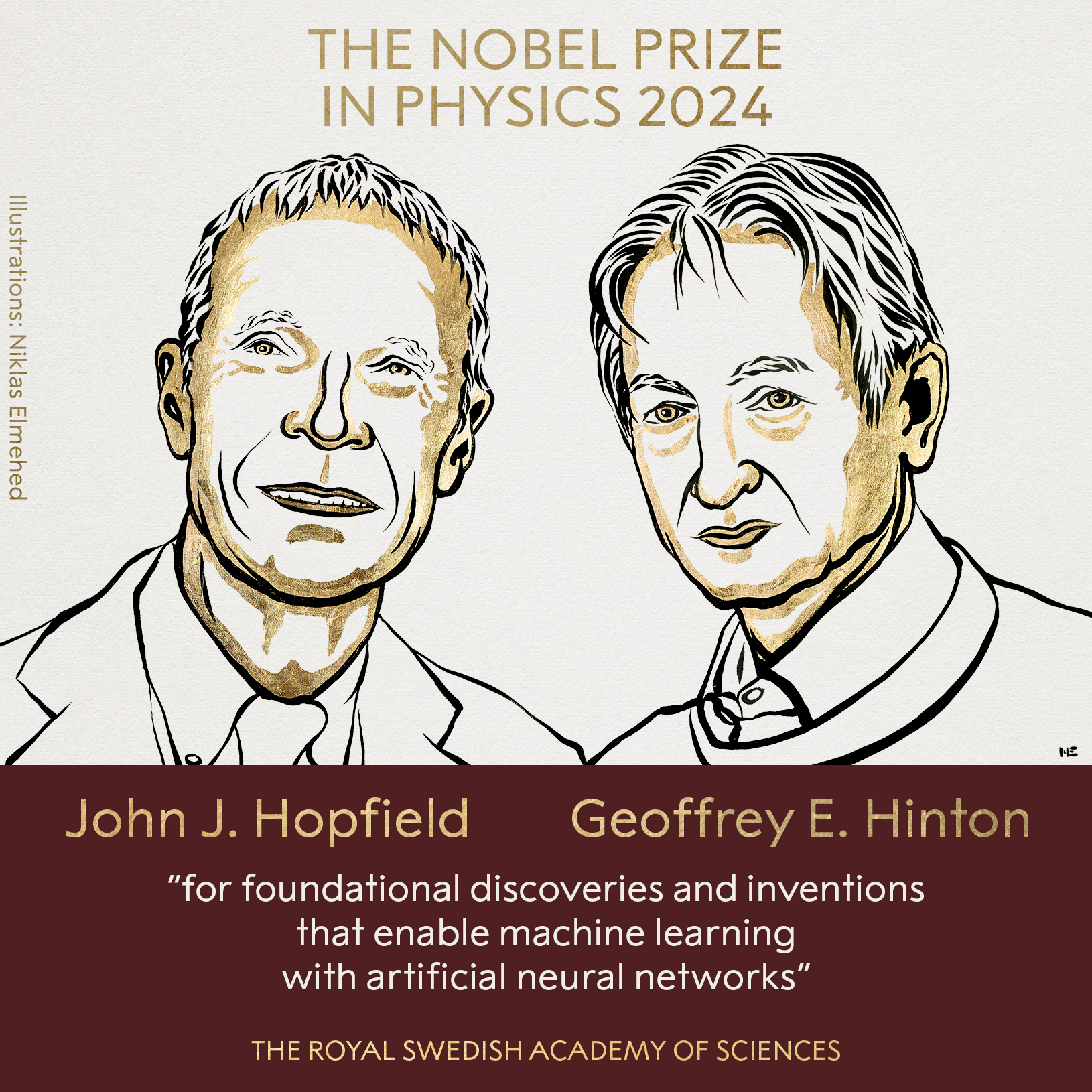

This year’s Nobel Prizes have put a spotlight on AI’s transformative impact across scientific disciplines. The Physics prize, awarded to Geoffrey Hinton and John Hopfield, celebrates their groundbreaking work on artificial neural networks – the very foundation of today’s deep learning revolution.

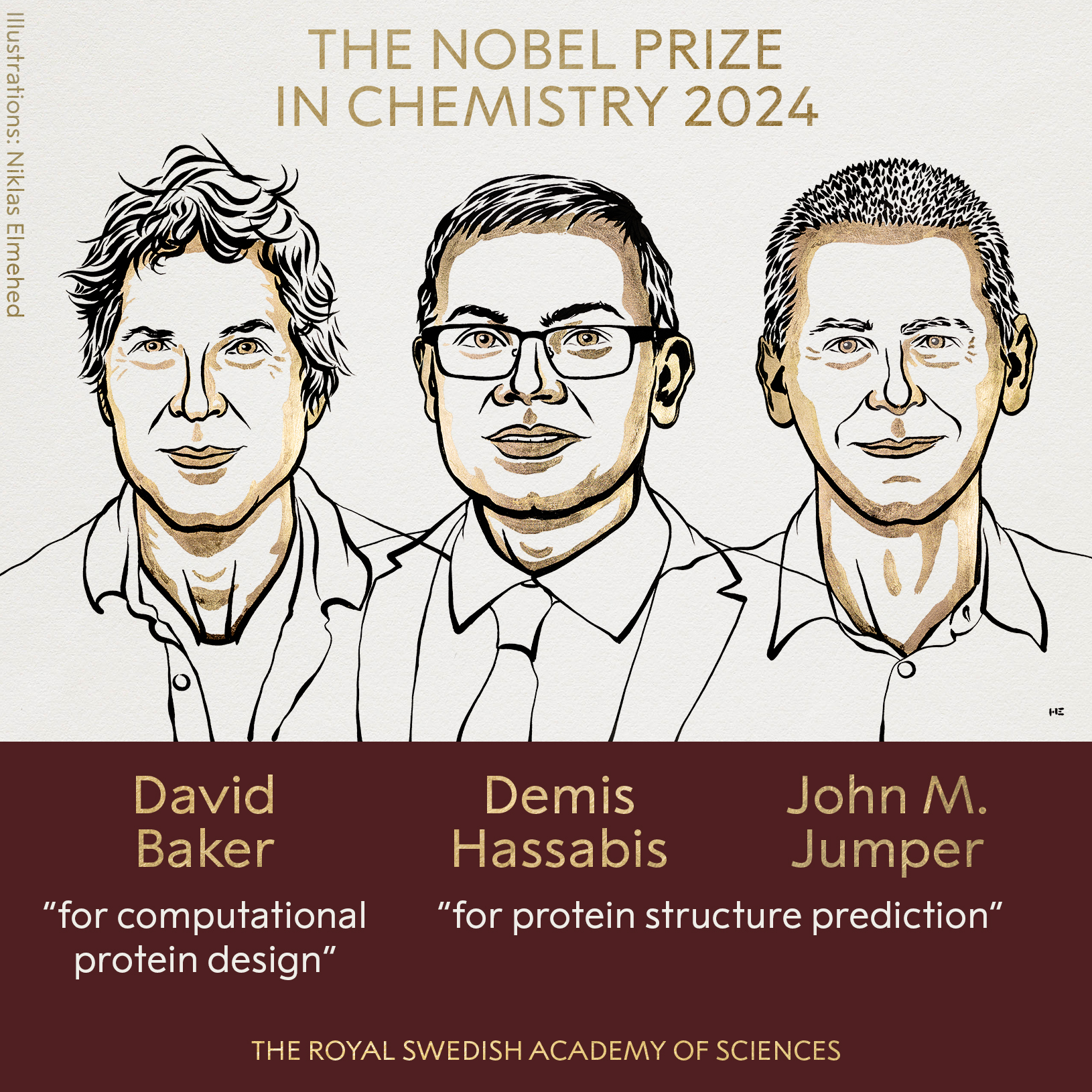

Meanwhile, the Chemistry prize was split: one half went to David Baker for computational protein design, and the other half jointly to Demis Hassabis and John Jumper for protein structure prediction with AlphaFold. This AI system has revolutionized protein structure prediction, opening new frontiers in drug discovery and biotechnology.

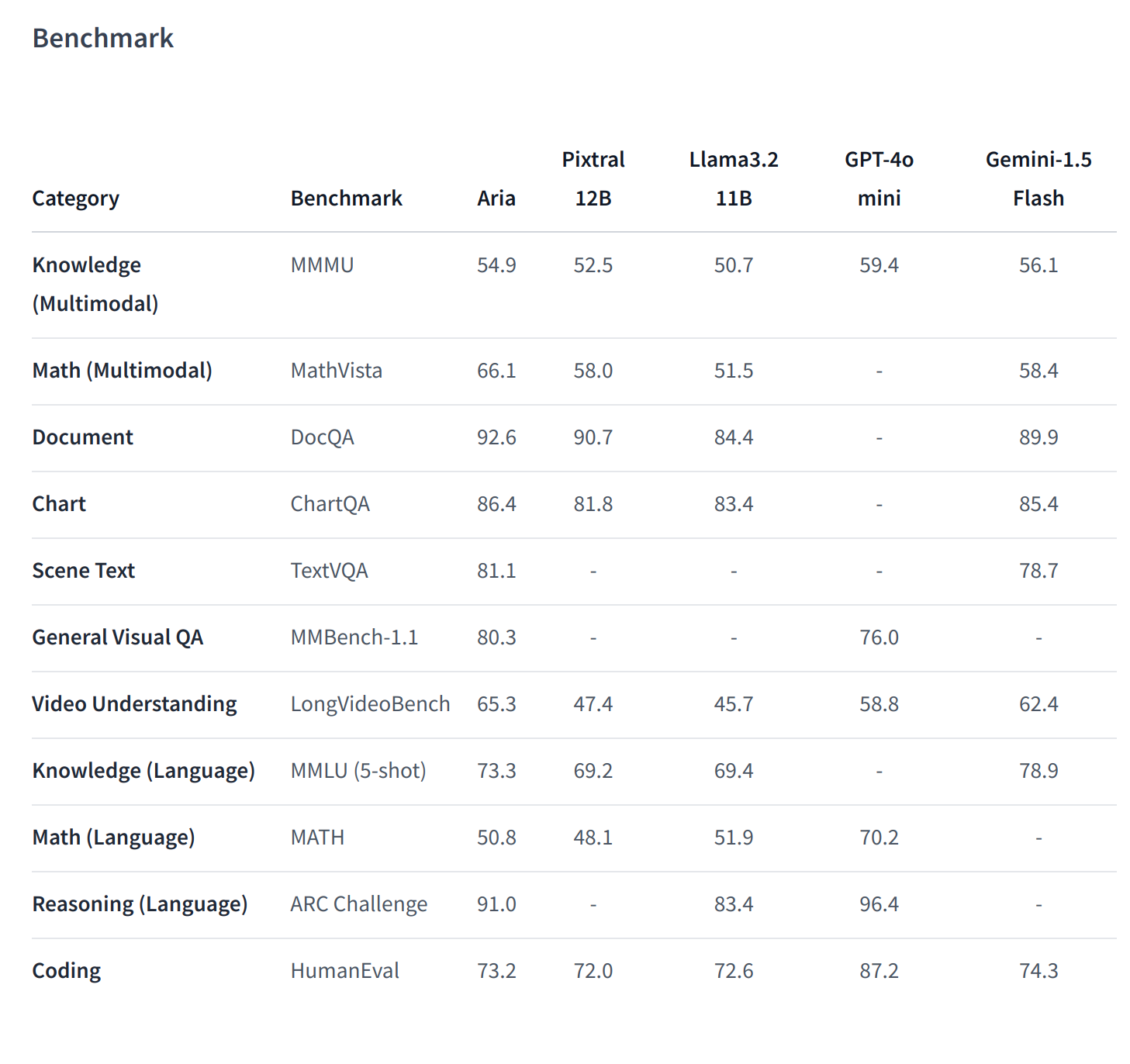

ARIA: A New Open-Source AI Powerhouse

The AI community is buzzing about ARIA, a newly released open-source multimodal model. With its Apache 2.0 license and impressive capabilities, ARIA is poised to shake up the field:

- 25.3 billion parameters (3.9 billion active)

- 64K token context window

- Handles text, images, audio, and video

- Innovative Mixture-of-Experts (MoE) architecture

- Early benchmarks show it outperforming several established models
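
To illustrate why a Mixture-of-Experts model can hold many parameters while activating only a fraction of them per token, here is a minimal top-k routing sketch. It is not ARIA's implementation: the expert count, `top_k`, the toy scaling "experts", and the random gate weights are all assumptions chosen for illustration.

```python
import math
import random

def softmax(xs):
    # Numerically stable softmax over a small list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    # Toy "expert": elementwise scaling standing in for a feed-forward block.
    return lambda x: [scale * v for v in x]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    # Router logits: one dot product per expert.
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts for this token.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalise the gate scores over the chosen experts only.
    probs = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for p, i in zip(probs, chosen):
        y = experts[i](x)  # only the chosen experts are ever evaluated
        out = [o + p * v for o, v in zip(out, y)]
    return out, chosen

random.seed(0)
dim, n_experts = 4, 8  # hypothetical sizes
experts = [make_expert(s) for s in range(1, n_experts + 1)]
gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token, gate_weights, experts, top_k=2)
print(f"experts used: {used} of {n_experts}")
```

Only two of the eight toy experts run for this token, which is the same idea behind a model reporting far fewer active parameters than total parameters.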

OpenAI’s o1 - Meta prompt release

Link to the Playground meta prompt guide

OpenAI Playground’s New Generate Button: Streamlining AI Development

The Playground has introduced a new feature: the Generate button. This tool is designed to simplify the process of creating prompts, functions, and schemas. Here’s how it works:

The Generate button uses two main approaches:

- Meta-prompts that incorporate best practices to generate or improve prompts

- Meta-schemas that produce valid JSON and function syntax

The feature currently relies on these two methods, but OpenAI says it may integrate more advanced techniques such as DSPy and “Gradient Descent” in the future.

Key features:

- Generates prompts and schemas from task descriptions

- Uses specific meta-prompts for different output types (e.g., audio)
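
The meta-prompt idea can be sketched as a fixed instruction that wraps whatever task description the user supplies. The snippet below only builds a chat-style message list and makes no API call. The `META_PROMPT` text and the `build_generation_request` helper are hypothetical placeholders, not the meta-prompt or code OpenAI actually uses.

```python
# Placeholder meta-prompt: a stand-in for a longer, best-practice instruction.
META_PROMPT = (
    "Given a task description or an existing prompt, write a detailed system "
    "prompt that guides a language model to complete the task effectively. "
    "State the goal first, then the steps, then the expected output format."
)

def build_generation_request(task_or_prompt):
    """Wrap a task description in the meta-prompt as chat-style messages."""
    return [
        {"role": "system", "content": META_PROMPT},
        {"role": "user", "content": "Task, goal, or current prompt:\n" + task_or_prompt},
    ]

messages = build_generation_request("Classify support tickets by urgency.")
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

A different output type (for example, audio) would swap in a different `META_PROMPT` while leaving the wrapper unchanged.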

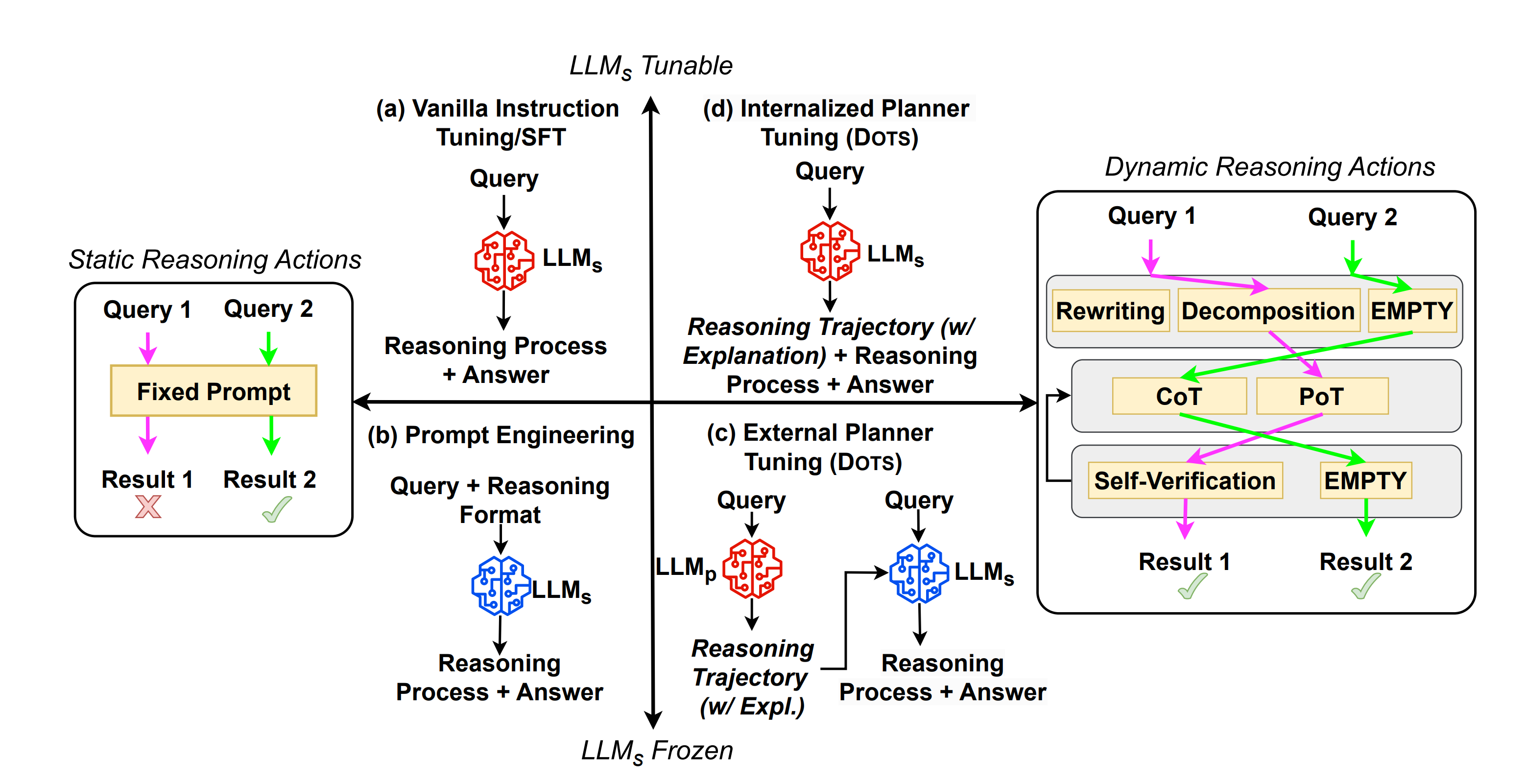

Fine-tuning LLMs: Dynamic Reasoning with DOTS

Researchers continue to push the boundaries of LLM fine-tuning, most recently by targeting reasoning. A new paper introduces DOTS (Dynamic Optimal reasoning Trajectories Search), an approach that fine-tunes LLMs to plan how they reason about each question.

Key features of DOTS:

- Tailors reasoning strategies to specific questions and the LLM’s capabilities

- Defines atomic reasoning action modules that can be combined into various trajectories

- Searches for optimal action trajectories through iterative exploration and evaluation

- Trains LLMs to plan reasoning trajectories for unseen questions

The DOTS method offers two learning paradigms:

- Fine-tuning an external LLM as a planner to guide the task-solving LLM

- Directly fine-tuning the task-solving LLM with internalized reasoning action planning

Results from experiments across eight reasoning tasks show that DOTS consistently outperforms static reasoning techniques and vanilla instruction tuning. Notably, this approach enables LLMs to adjust their computation based on problem complexity, allocating deeper thinking to more challenging problems.
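
The search step described above can be sketched as follows: enumerate combinations of atomic reasoning actions, score each combination on a question, and keep the best one, preferring shorter trajectories on ties. This is a toy illustration, not the paper's code. The action names, the `toy_success_rate` scorer (a stand-in for actually running an LLM and measuring accuracy), and the tie-breaking rule are assumptions.

```python
from itertools import product

# Hypothetical atomic actions, grouped by stage of the reasoning process.
ANALYSIS = ["none", "rewrite", "decompose"]
SOLUTION = ["chain_of_thought", "program_of_thought"]
VERIFICATION = ["none", "self_verify"]

def toy_success_rate(question, trajectory):
    """Stand-in for sampling the LLM several times and measuring accuracy."""
    analysis, solution, verification = trajectory
    rate = 0.4
    if question["needs_arithmetic"] and solution == "program_of_thought":
        rate += 0.3
    if question["multi_step"] and analysis == "decompose":
        rate += 0.2
    if question["multi_step"] and verification == "self_verify":
        rate += 0.1
    return rate

def cost(trajectory):
    # Number of non-empty actions: a rough proxy for compute spent.
    return sum(1 for action in trajectory if action != "none")

def best_trajectory(question):
    """Pick the highest-scoring trajectory, breaking ties toward lower cost."""
    candidates = product(ANALYSIS, SOLUTION, VERIFICATION)
    return max(candidates, key=lambda t: (toy_success_rate(question, t), -cost(t)))

easy = {"needs_arithmetic": False, "multi_step": False}
hard = {"needs_arithmetic": True, "multi_step": True}
print("easy:", best_trajectory(easy))
print("hard:", best_trajectory(hard))
```

In this toy setup the easy question gets a single solution action while the hard one gets analysis, solution, and verification, mirroring the idea of allocating more computation to harder problems. In DOTS, the trajectories found this way become training data so the model can plan them for unseen questions.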

This development addresses longstanding challenges in LLM fine-tuning, such as:

- Overcoming the limitations of static, predefined reasoning actions

- Adapting to the specific characteristics of each question

- Optimizing performance for the inherent capabilities of different LLMs

Approaches like DOTS could meaningfully improve the reasoning capabilities of large language models across a range of application domains.

Quantization: Making AI More Accessible

The push for efficient AI is driving innovative quantization techniques:

- BitNet (Microsoft): Uses 1-bit weights and quantized activations (link to the paper)

- AdderLM: Replaces floating-point multiplication with integer addition (link to the paper)

These methods aim to reduce computational resources while maintaining performance, potentially bringing AI to more resource-constrained devices.
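
To make the 1-bit idea concrete, here is a minimal sketch of a generic sign-and-scale weight binarization. It is written in the spirit of 1-bit weight schemes but does not reproduce BitNet's exact formulation: the centering, the single shared scale, and the function names are assumptions, and activations are left unquantized for simplicity.

```python
def binarize(weights):
    """Map real-valued weights to {-1, +1} plus one shared scale factor."""
    flat = [w for row in weights for w in row]
    mean = sum(flat) / len(flat)
    # One scale for the whole matrix: the mean absolute weight.
    scale = sum(abs(w) for w in flat) / len(flat)
    # Center the weights, then keep only the sign of each entry.
    signs = [[1 if w - mean >= 0 else -1 for w in row] for row in weights]
    return signs, scale

def binary_matvec(signs, scale, x):
    """Matrix-vector product whose inner loop only adds or subtracts inputs."""
    out = []
    for row in signs:
        acc = 0.0
        for s, v in zip(row, x):
            acc = acc + v if s == 1 else acc - v
        out.append(scale * acc)  # a single multiplication per output element
    return out

weights = [[0.5, -0.3], [-0.2, 0.4]]
signs, scale = binarize(weights)
approx = binary_matvec(signs, scale, [1.0, 2.0])
exact = [sum(w * v for w, v in zip(row, [1.0, 2.0])) for row in weights]
print("signs:", signs)
print("approx:", approx, "exact:", exact)
```

Each weight is stored as one bit, and the inner loop needs no multiplications, which is where the memory and compute savings come from. The price is an approximation error that quantization-aware training is meant to absorb.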

Tools and Frameworks: Empowering Developers

New tools are streamlining AI development workflows:

- Aider v0.59.0: Enhances shell-style auto-complete and YAML config (link to the repo)

- OpenRouter: Improves LLM routing and API management (link to the website)

Recent Papers of Interest

“Benchmarking Agentic Workflow Generation” (link to the paper)

- Introduces WorFBench and WorFEval for evaluating LLM workflow generation

- Reveals gaps between sequence and graph planning capabilities in LLMs

“Towards Self-Improvement of LLMs via MCTS” (link to the paper)

- Proposes AlphaLLM-CPL for more effective MCTS behavior distillation

- Shows promising results in improving LLM reasoning capabilities

“Named Clinical Entity Recognition Benchmark” (link to the paper)

- Establishes a standardized platform for assessing language models in healthcare NLP tasks

- Utilizes OMOP Common Data Model for consistency across datasets