AI Newsletter - Nov 2

Highlights:

- Smaller models are better.

- Layer skip decoding is better than beam search.

- Tokenformer is a better architecture for scaling language models.

- Robots can sense touch using new sensors developed by Meta.

- OpenAI and Google are trading punches with new releases within minutes of each other.

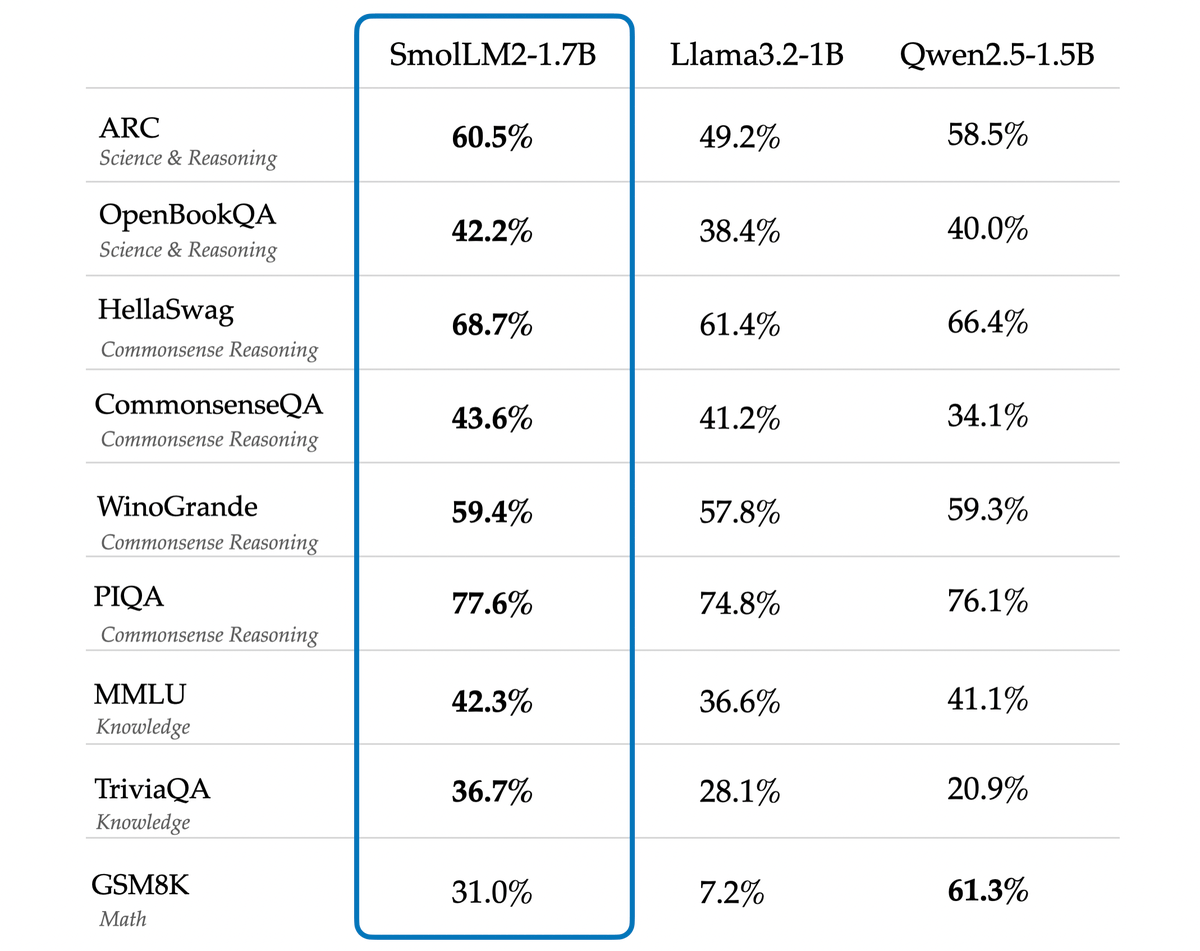

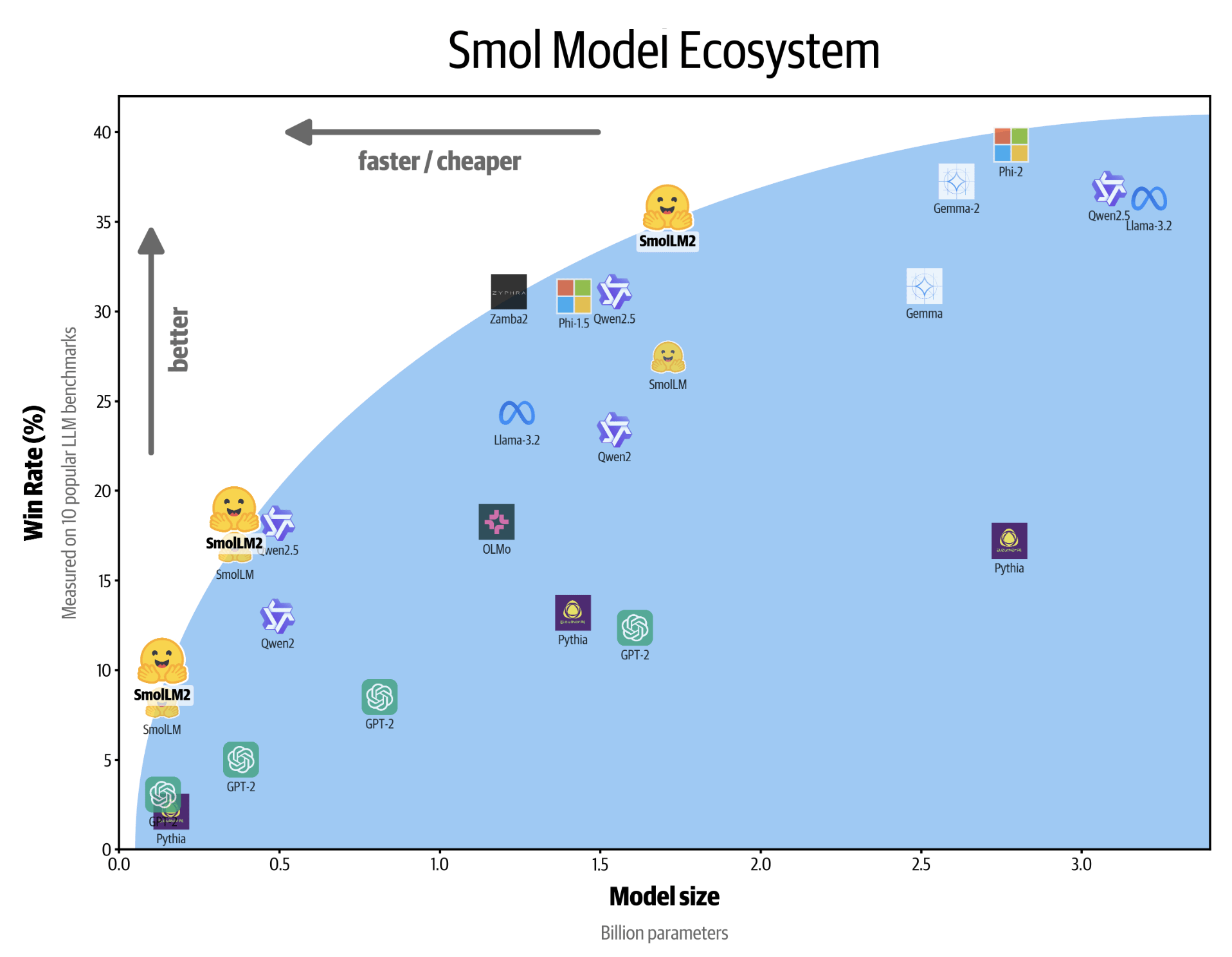

SmolLM 2: Smaller Models, Bigger Impact

Hugging Face has unveiled SmolLM v2, a release that pushes the boundaries of small language models. The collection features three models (135M, 360M, and 1.7B parameters) that outperform comparably sized models from the Qwen and Llama 3.2 families across various benchmarks. Released under the Apache 2.0 license, these models are specifically designed for edge computing and in-browser applications.

link to the model: https://huggingface.co/collections/HuggingFaceTB/smollm2-6723884218bcda64b34d7db9
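To make the edge-computing angle concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. The parameter counts are the nominal sizes quoted above, and the bytes-per-parameter figures are generic assumptions about common storage formats, not numbers from the release. Activations, KV cache, and runtime overhead are not included.

```python
# Nominal parameter counts taken from the model names (rounded, not exact).
PARAM_COUNTS = {
    "SmolLM2-135M": 135_000_000,
    "SmolLM2-360M": 360_000_000,
    "SmolLM2-1.7B": 1_700_000_000,
}

# Assumed storage cost per parameter for a few common formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_megabytes(n_params: int, dtype: str) -> float:
    """Approximate size of the weights in megabytes (1 MB = 1e6 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e6


if __name__ == "__main__":
    for name, n_params in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{dtype}: {weight_megabytes(n_params, dtype):,.0f} MB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name}: {sizes}")
```

Under these assumptions the smallest model needs roughly 270 MB of weights in fp16, which is why it is plausible to ship it to a browser or a phone.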

Here is an overview of small models and how these models fit in.

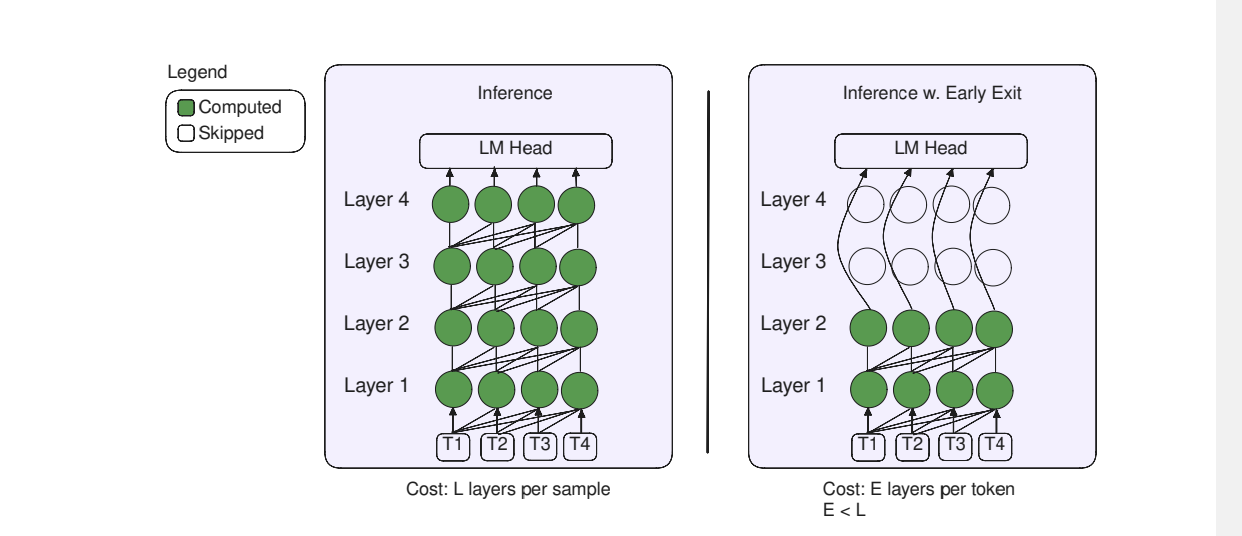

Layer Skip: Meta’s Breakthrough in LLM Acceleration

Meta has introduced a decoding method called Layer Skip that significantly speeds up LLM inference. Key highlights:

- Achieves up to 2.16x speedup for summarization tasks

- Delivers 1.82x acceleration for coding tasks

- Provides 2.0x improvement in semantic parsing

- Operates by drafting tokens with only the early layers, then using the remaining layers to verify and correct them

- Released with inference code and fine-tuned checkpoints for Llama 3, Llama 2, and Code Llama

link to the paper: https://arxiv.org/abs/2404.16710

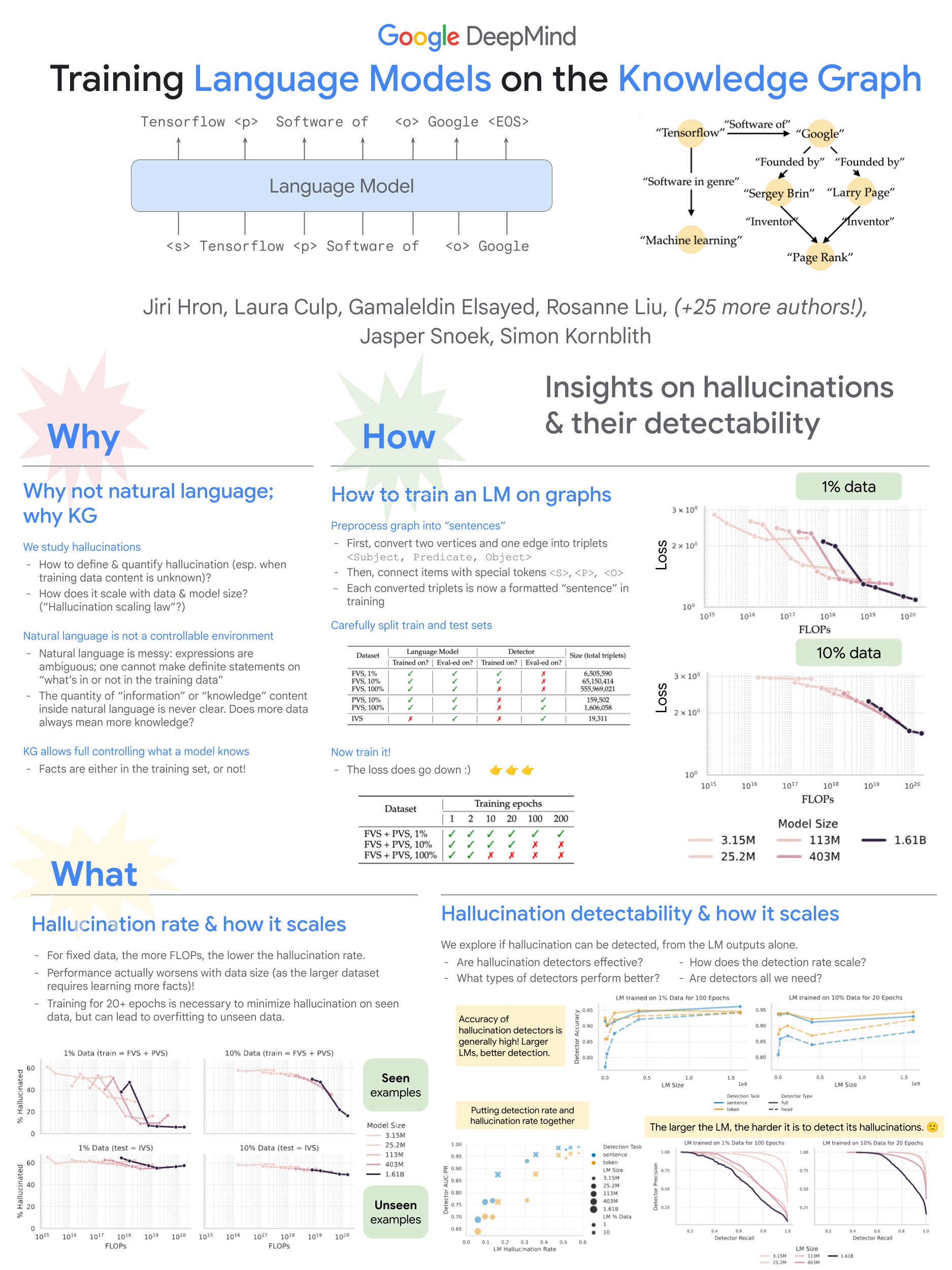
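The draft-then-verify idea from the list above can be sketched with a toy model. Everything here is hypothetical: the "layers" are simple integer functions, `EXIT_LAYER` and `draft_len` are made-up settings, and verification is done token by token rather than in one batched pass with shared activations as a real implementation would. The point is only to show that early-exit drafts plus full-model verification reproduce plain greedy decoding while needing fewer verification rounds.

```python
from typing import Callable, List, Tuple

VOCAB_SIZE = 50
EXIT_LAYER = 3  # hypothetical early-exit point used for drafting

# Toy "layers": each maps an integer hidden state to a new one.
LAYERS: List[Callable[[int], int]] = [
    lambda h: h * 3 + 1,
    lambda h: h + 7,
    lambda h: h * 5 + 2,
    lambda h: h + 1 if h % 4 == 0 else h,  # late layer that only sometimes matters
]


def next_token(context: List[int], n_layers: int) -> int:
    """Predict the next token using only the first n_layers layers."""
    h = sum(context)
    for layer in LAYERS[:n_layers]:
        h = layer(h)
    return h % VOCAB_SIZE


def greedy_decode(prompt: List[int], n_new: int) -> List[int]:
    """Baseline: run the full model once per generated token."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(next_token(tokens, len(LAYERS)))
    return tokens


def self_speculative_decode(
    prompt: List[int], n_new: int, draft_len: int = 4
) -> Tuple[List[int], int]:
    """Draft with early layers, verify with the full model.

    Returns the tokens and the number of verification rounds.
    """
    tokens = list(prompt)
    target = len(prompt) + n_new
    rounds = 0
    while len(tokens) < target:
        # 1) Draft a few tokens cheaply with the early-exit model.
        draft: List[int] = []
        for _ in range(min(draft_len, target - len(tokens))):
            draft.append(next_token(tokens + draft, EXIT_LAYER))
        # 2) Verify: keep drafts while they match the full model,
        #    and replace the first mismatch with the full model's token.
        rounds += 1
        accepted: List[int] = []
        for i, guess in enumerate(draft):
            verified = next_token(tokens + draft[:i], len(LAYERS))
            accepted.append(verified)
            if verified != guess:
                break
        tokens.extend(accepted)
    return tokens, rounds


if __name__ == "__main__":
    out, rounds = self_speculative_decode([1, 2, 3], 20)
    print(out == greedy_decode([1, 2, 3], 20), rounds)
```

Because every accepted token is checked against the full model, the output matches greedy decoding exactly. Any speedup comes from how often the early-exit drafts are right.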

Knowledge Graphs: A New Approach to Combat Hallucinations

Recent research explores using knowledge graphs for LLM training, offering fascinating insights into hallucination reduction:

- Larger models combined with extended training periods show reduced hallucination rates

- Achieving a ≤5% hallucination rate demands significantly more computing power than previously estimated

- Interesting discovery: as models grow larger, their hallucinations become more difficult to detect

- Training on a knowledge graph provides clearer boundaries and control over knowledge incorporation during training

link for more information: https://x.com/savvyRL/status/1844073150025515343
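One reason a knowledge graph gives clear boundaries is that every generated fact can be checked against a known set of triples. The sketch below illustrates that idea only. The triples, the `hallucination_rate` helper, and the exact-match rule are assumptions made for illustration and are not the evaluation protocol from the research.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def hallucination_rate(predictions: Iterable[Triple], graph: Set[Triple]) -> float:
    """Fraction of predicted triples that do not appear in the knowledge graph."""
    predictions = list(predictions)
    if not predictions:
        return 0.0
    unsupported = sum(1 for triple in predictions if triple not in graph)
    return unsupported / len(predictions)


if __name__ == "__main__":
    # Hypothetical training graph and model outputs.
    graph = {
        ("Paris", "capital_of", "France"),
        ("Berlin", "capital_of", "Germany"),
        ("Rome", "capital_of", "Italy"),
    }
    predicted = [
        ("Paris", "capital_of", "France"),
        ("Berlin", "capital_of", "Germany"),
        ("Rome", "capital_of", "Italy"),
        ("Madrid", "capital_of", "Portugal"),  # not in the graph
    ]
    print(f"hallucination rate: {hallucination_rate(predicted, graph):.0%}")
```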

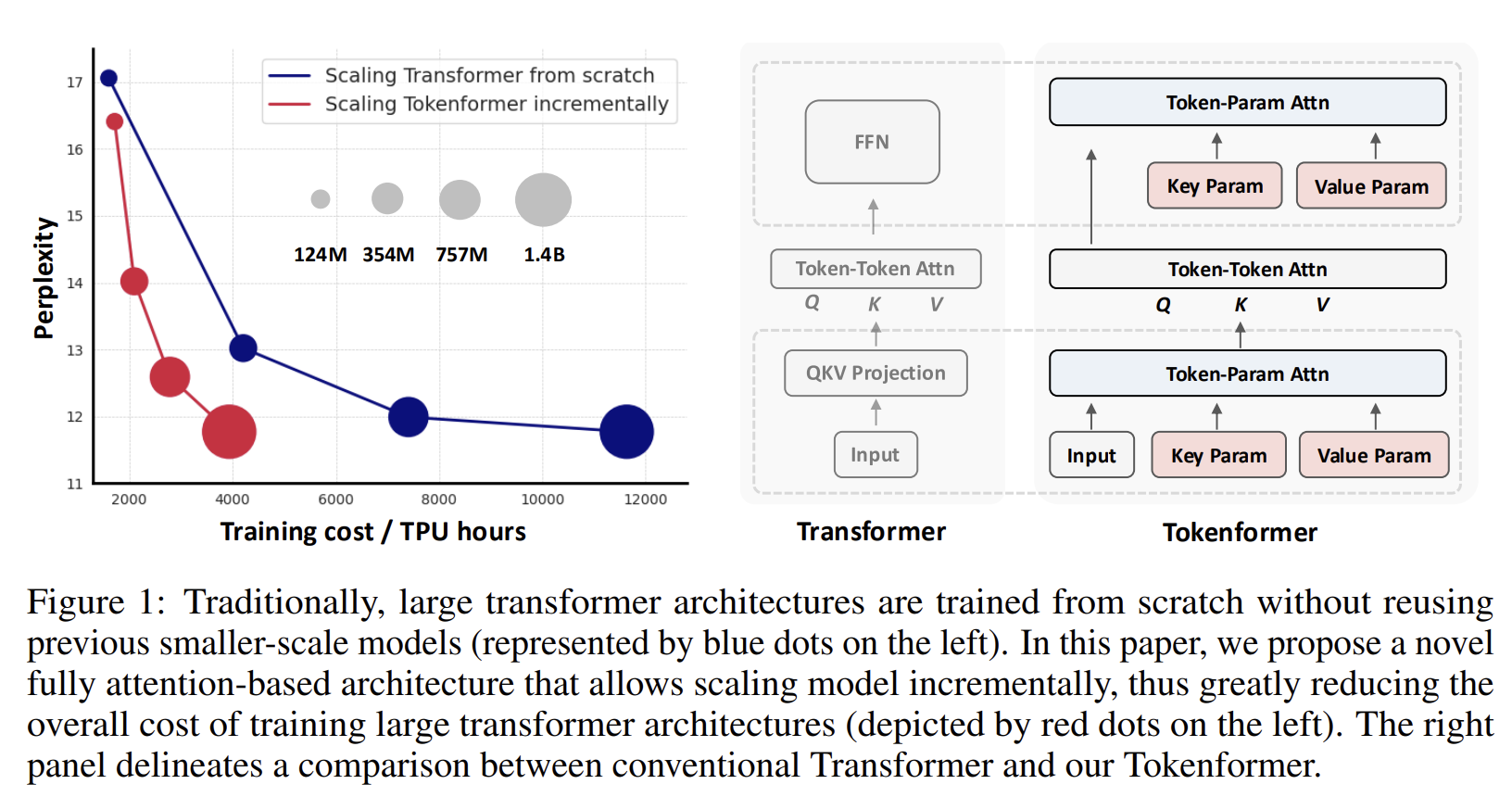

Tokenformer: Revolutionizing Language Model Architecture

A new architecture has emerged to tackle the scaling challenges of traditional transformers:

- Introduces a token-parameter attention layer to replace linear projections

- Enables progressive scaling from 124M to 1.4B parameters

- Eliminates the need for complete retraining when scaling up

- Achieves performance comparable to traditional transformers

- Available as open-source with complete code and model access

link to the paper: https://arxiv.org/abs/2410.23168
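To see how replacing a linear projection with attention over parameters can allow growth without retraining, here is a minimal pure-Python sketch. The function names, the tanh GeLU approximation, and the L2-normalized scoring are simplifying assumptions loosely modeled on the idea, not the paper's exact formulation. The input attends over learnable key and value "parameter tokens", and new tokens initialized to zero leave the output unchanged.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def gelu(x: float) -> float:
    """Tanh approximation of GeLU; note that gelu(0) == 0."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def pattention(x: List[float], key_params: Matrix, value_params: Matrix) -> List[float]:
    """Token-parameter attention for one input vector (simplified sketch)."""
    # One score per parameter token: dot product of the input with each key.
    scores = [sum(xi * ki for xi, ki in zip(x, key)) for key in key_params]
    # Assumed normalization: divide by the L2 norm of the scores, then apply GeLU.
    norm = math.sqrt(sum(s * s for s in scores)) or 1.0
    weights = [gelu(s / norm) for s in scores]
    d_out = len(value_params[0])
    # Output is the weighted sum of the value parameter tokens.
    return [sum(w * v[j] for w, v in zip(weights, value_params)) for j in range(d_out)]


def grow_parameters(
    key_params: Matrix, value_params: Matrix, extra_tokens: int
) -> Tuple[Matrix, Matrix]:
    """Append zero-initialized parameter tokens to scale the layer up."""
    d_in, d_out = len(key_params[0]), len(value_params[0])
    new_keys = key_params + [[0.0] * d_in for _ in range(extra_tokens)]
    new_values = value_params + [[0.0] * d_out for _ in range(extra_tokens)]
    return new_keys, new_values


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]
    keys = [[0.1, 0.2, 0.3], [0.4, -0.5, 0.6]]
    values = [[1.0, 0.0], [0.0, 1.0]]
    before = pattention(x, keys, values)
    big_keys, big_values = grow_parameters(keys, values, extra_tokens=3)
    after = pattention(x, big_keys, big_values)
    print(before, after)
```

In this sketch the zero keys produce zero scores, which contribute nothing to the norm or to the weighted sum, so the larger layer starts from the same function and the new parameters can then be trained further.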

Meta’s Touch-Sensitive Robotics Breakthrough

Meta FAIR has announced significant advances in robotics technology:

- New developments in touch perception and dexterity

- Partnerships with GelSight Inc and Wonik Robotics

- Focus on commercializing tactile sensing innovations

- Commitment to fostering an open ecosystem for AI development

link for more information: https://ai.meta.com/blog/fair-robotics-open-source/?utm_source=twitter&utm_medium=organic_social&utm_content=video&utm_campaign=fair

Search Integration Face-off: Google vs OpenAI

Google’s Grounding with Search

Google has launched search grounding for Gemini models, offering:

- Reduced hallucinations through factual grounding

- Real-time information access

- Enhanced trustworthiness with supporting links

- Richer information through Google Search integration

OpenAI’s ChatGPT Web Search

OpenAI has enhanced ChatGPT with improved web search capabilities:

- Faster, more timely answers

- Direct links to relevant web sources

- Improved search accuracy and relevance